There is a great effort in statistical physics to understand phase transitions. A phase transition is characterized by an abrupt change in the properties of a material, such as a sudden change in its magnetization or specific heat. We are going to see a simple argument showing that some systems must have phase transitions while others can't. It is a well-known argument due to Rudolf Peierls.

To begin, we have to understand what happens to a system when it is in contact with a reservoir at fixed temperature. Does it try to minimize its internal energy? Or maximize its entropy? What really happens is a balance between these two principles: the system minimizes its Helmholtz free energy. This is a thermodynamic potential given, in terms of the internal energy $U$ and the entropy $S$ at a fixed temperature $T$, by

$$F = U - TS.$$

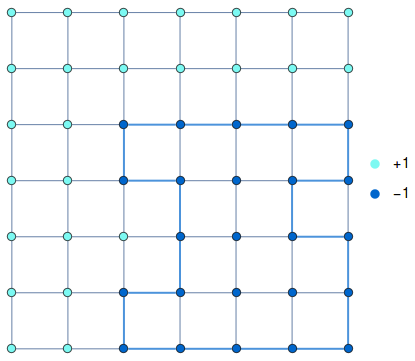

The equilibrium state is the one that minimizes the Helmholtz free energy and has the same temperature as the reservoir. Let's see how this idea applies to a simple and paradigmatic system of statistical mechanics: the Ising model. We have a square lattice, and at each site we place a spin variable that can take the value +1 or -1. That is, each spin may point up or down.

The energy of this system is given by its Hamiltonian. We imagine that every spin interacts only with its nearest neighbors, and that the interaction energy is lower when both spins point in the same direction. A Hamiltonian with these features is

$$H = -J\sum_{\langle i,j\rangle}\sigma_i\sigma_j - \sum_i h_i\,\sigma_i,$$

where the first sum runs over pairs of nearest neighbors. Here $J$ is a positive quantity standing for the interaction energy between spins, and each variable $\sigma_i$ represents the spin at position $i$. When two neighboring spins point in the same direction, their contribution to the energy is negative, which lowers the total energy; when they point in opposite directions, they raise it. Finally, $h_i$ is an external magnetic field at site $i$.
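A minimal sketch of this Hamiltonian in Python, assuming periodic boundary conditions and a uniform field $h_i = h$ (neither is specified in the text). The function name `ising_energy` is hypothetical; each nearest-neighbor bond is counted once by looking only to the right and downward from every site.

```python
from typing import List


def ising_energy(spins: List[List[int]], J: float = 1.0, h: float = 0.0) -> float:
    """Energy of an Ising configuration on a periodic square lattice.

    Implements H = -J * sum_<ij> s_i s_j - h * sum_i s_i, counting each
    nearest-neighbour bond once (only right and down neighbours are visited).
    Assumes a uniform field h and a lattice of at least 3 x 3, so that the
    periodic wrap-around never counts the same bond twice.
    """
    n_rows, n_cols = len(spins), len(spins[0])
    bond_sum = 0
    magnetization = 0
    for i in range(n_rows):
        for j in range(n_cols):
            s = spins[i][j]
            bond_sum += s * spins[i][(j + 1) % n_cols]  # right neighbour
            bond_sum += s * spins[(i + 1) % n_rows][j]  # down neighbour
            magnetization += s
    return -J * bond_sum - h * magnetization


# All spins up on a 4 x 4 lattice: 32 aligned bonds, each contributing -J.
all_up = [[1] * 4 for _ in range(4)]
print(ising_energy(all_up))         # -32.0
print(ising_energy(all_up, h=0.5))  # -40.0
```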

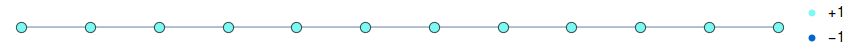

Now we want to find the state of minimum free energy, beginning with a 1D system. Let's start with a chain with all spins pointing up. This would be the equilibrium state if the system were at zero temperature: all neighboring spins point in the same direction, so the energy is the lowest possible.

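If we invert a strip of $L$ consecutive spins that includes a certain spin $\sigma_0$, the internal energy of the system rises by

$$\Delta U = 4J,$$

since the strip is bounded by two pairs of misaligned neighbors, each costing $2J$. Note that this change in energy does not depend on the size of the domain of down spins we created.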
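To check that $\Delta U = 4J$ regardless of the strip length, here is a small sketch on an open chain with no external field. The helper names `chain_energy` and `flip_strip` are hypothetical, and the strip is placed away from the chain ends so that it really has two domain walls.

```python
def chain_energy(spins, J=1.0):
    """H = -J * sum_i s_i s_{i+1} for an open 1D chain without external field."""
    return -J * sum(a * b for a, b in zip(spins, spins[1:]))


def flip_strip(spins, start, length):
    """Return a copy of the chain with `length` consecutive spins flipped."""
    out = list(spins)
    for k in range(start, start + length):
        out[k] = -out[k]
    return out


N = 50
up = [1] * N
for L in (1, 5, 20):
    # The strip starts at site 10, far from both ends of the chain.
    dU = chain_energy(flip_strip(up, 10, L)) - chain_energy(up)
    print(L, dU)  # 4.0 each time: two broken bonds, 2J apiece
```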

To account for the entropy difference, we have to count in how many ways this configuration can be arranged. The answer is simple: there are only $L$ ways to place a domain of $L$ flipped spins that includes spin $\sigma_0$. The change in entropy is given by the Boltzmann formula

$$\Delta S = k_B \ln \Omega,$$

where $\Omega$ is the number of ways the system can be arranged, which in this case is simply $\Omega = L$, and $k_B$ is the Boltzmann constant.

Creating this domain therefore changes the free energy by $\Delta F = 4J - k_B T \ln L$. At any nonzero temperature the second term grows without bound as $L$ increases, so for a large enough domain it exceeds the first and $\Delta F < 0$. Creating a domain of flipped spins is therefore favorable, since it lowers the free energy, and the system moves to the new state, accommodating the created domain. Other domains like this are created by thermal fluctuations and eventually disorder the whole system.
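The threshold can be made explicit: $\Delta F = 4J - k_B T\ln L$ becomes negative as soon as $L > e^{4J/k_BT}$, which is finite for every $T > 0$. A short sketch, assuming units with $J = k_B = 1$ (the function names are hypothetical):

```python
import math


def delta_F_1d(L, T, J=1.0, k_B=1.0):
    """Free-energy change for one flipped strip of length L containing a fixed spin.

    Delta U = 4J (two domain walls) and Delta S = k_B ln L (L possible placements).
    """
    return 4 * J - k_B * T * math.log(L)


def critical_strip_length(T, J=1.0, k_B=1.0):
    """Smallest integer L with Delta F < 0, i.e. the first L above exp(4J / (k_B T))."""
    return math.floor(math.exp(4 * J / (k_B * T))) + 1


for T in (0.5, 1.0, 2.0):
    L_star = critical_strip_length(T)
    print(f"T = {T}: strips longer than {L_star - 1} sites lower F, "
          f"Delta F({L_star}) = {delta_F_1d(L_star, T):.4f}")
```

The threshold grows very quickly as $T$ decreases, but it never becomes infinite, which is why the 1D chain disorders at any nonzero temperature.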

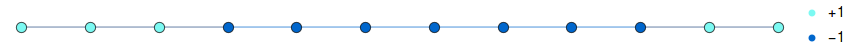

So in one dimension disorder always wins, because it lowers the Helmholtz free energy. In two dimensions, however, the picture is very different. We again start with a completely ordered lattice with all spins pointing up and form an island of down spins with perimeter $L$.

There are misaligned pairs of spins all along the border of this region, and each pair of misaligned neighbors across the border adds $2J$ to the internal energy. Only these pairs contribute to the change in energy, so the total energy difference is proportional to the perimeter:

$$\Delta U = 2JL.$$
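A sketch checking $\Delta U = 2JL$ on an irregular island, assuming a periodic $12 \times 12$ lattice with $J = 1$ and no field. The island shape and the helper names are hypothetical; the perimeter is measured as the number of bonds joining a flipped site to an unflipped one.

```python
def lattice_energy(spins, J=1.0):
    """H = -J * sum_<ij> s_i s_j on an n x n periodic square lattice (n >= 3)."""
    n = len(spins)
    E = 0.0
    for i in range(n):
        for j in range(n):
            s = spins[i][j]
            E -= J * s * (spins[i][(j + 1) % n] + spins[(i + 1) % n][j])
    return E


def island_perimeter(island, n):
    """Number of lattice bonds joining a site of `island` to a site outside it."""
    count = 0
    for (i, j) in island:
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            if ((i + di) % n, (j + dj) % n) not in island:
                count += 1
    return count


n = 12
up = [[1] * n for _ in range(n)]
# Hypothetical island: a 3 x 4 rectangle with one extra site attached below it.
island = {(i, j) for i in range(3, 6) for j in range(3, 7)} | {(6, 3)}
flipped = [row[:] for row in up]
for (i, j) in island:
    flipped[i][j] = -1

dU = lattice_energy(flipped) - lattice_energy(up)
P = island_perimeter(island, n)
print(P, dU)  # 16 32.0, i.e. dU = 2 * J * P
```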

Once again, we compute the change in entropy due to the flipped domain by counting the number of ways to build an island that includes a fixed spin. This number is roughly $\mu^L$, where $\mu$ is a number less than 3, as we are going to see. The change in entropy is then

$$\Delta S = k_B \ln \mu^L = k_B L \ln \mu,$$

and finally the change in free energy is

$$\Delta F = \Delta U - T\,\Delta S = L\,(2J - k_B T \ln \mu).$$

This quantity is linear in $L$ and can be either positive or negative, depending on the temperature. At low temperatures $\Delta F$ is positive, so the formation of flipped domains is unfavorable and the system remains ordered. At high temperatures, on the other hand, these domains reduce the Helmholtz free energy and disorder wins. The temperature at which the behavior changes is called the critical temperature, and it is of the order

$$T_c \sim \frac{2J}{k_B \ln \mu}.$$
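Setting $\Delta F = L(2J - k_BT\ln\mu)$ to zero gives $T_c = 2J/(k_B\ln\mu)$. The sketch below evaluates this for the crude bound $\mu = 3$, the fitted 2.45 and the more precise value near 2.638 discussed later, and compares with Onsager's exact result for the 2D Ising model, $T_c = 2J/(k_B\ln(1+\sqrt{2}))$. Units with $J = k_B = 1$ are assumed; the Peierls value is only an order-of-magnitude estimate, not a prediction of the exact $T_c$.

```python
import math


def peierls_tc(mu, J=1.0, k_B=1.0):
    """Temperature at which Delta F = L * (2J - k_B T ln mu) changes sign."""
    return 2 * J / (k_B * math.log(mu))


# Onsager's exact critical temperature of the 2D square-lattice Ising model, in J / k_B.
onsager = 2 / math.log(1 + math.sqrt(2))

for mu in (3.0, 2.45, 2.638):
    print(f"mu = {mu}: T_c ~ {peierls_tc(mu):.3f} J/k_B")
print(f"Onsager exact: {onsager:.3f} J/k_B")
```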

This argument illustrates the idea of a lower critical dimension, which is $d_l = 1$ for the Ising model and similar systems (systems with short-range interactions and a discrete symmetry). This means that no ordered phase can exist at finite temperature at or below this dimension, only above it.

A little more about $\mu$

Let's now investigate the number $\mu$, which I argued is less than 3. We want to draw a closed path of length $L$ on a square lattice. Beginning at the site $x_0$ of the fixed spin, there are 4 possible directions to go; we may choose the up direction, for instance.

At the next site we can't go back, so there are only 3 options left. At every subsequent step there are at most 3 options, and sometimes fewer, because the path cannot cross itself and must be closed: we have to be back at the original site after exactly $L$ steps.

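At some point the number of options decreases, forcing us to go back to site $x_0$. Hence the number of paths is bounded by $4 \cdot 3^{L-1}$, and we can say it grows roughly as $\mu^L$ with $\mu < 3$. Mathematically, if $c_L$ is the number of such closed paths of length $L$, there is a well-defined limit $\mu = \lim_{L\to\infty} c_L^{1/L}$ as the number of steps $L$ approaches infinity (taking $L$ even, since closed paths on the square lattice always have even length). There are several computational efforts to find this limit with greater precision and to enumerate the exact number of possible paths at larger values of $L$.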
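For small $L$ the closed paths can simply be enumerated by depth-first search. The sketch below (the name `count_cycles` is hypothetical) counts oriented closed self-avoiding walks of $L$ steps starting and ending at a fixed site, pruning any branch that can no longer return to the origin in the remaining steps, and compares the count with the bound $4\cdot 3^{L-1}$.

```python
def count_cycles(L: int) -> int:
    """Number of closed self-avoiding walks of L steps on the square lattice
    that start and end at the origin (oriented, with a fixed starting site)."""
    if L < 4:
        return 0  # the shortest cycle goes around a unit square
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    visited = {(0, 0)}

    def extend(x: int, y: int, remaining: int) -> int:
        total = 0
        for dx, dy in steps:
            nx, ny = x + dx, y + dy
            if (nx, ny) == (0, 0):
                if remaining == 1:  # the loop may only close on the final step
                    total += 1
                continue
            # Skip occupied sites and sites too far away to return in time.
            if (nx, ny) in visited or abs(nx) + abs(ny) > remaining - 1:
                continue
            visited.add((nx, ny))
            total += extend(nx, ny, remaining - 1)
            visited.remove((nx, ny))
        return total

    return extend(0, 0, L)


for L in range(4, 13, 2):
    c = count_cycles(L)
    bound = 4 * 3 ** (L - 1)
    print(f"L = {L:2d}  c_L = {c:6d}  bound = {bound:8d}  ratio = {c / bound:.4f}")
```

Odd lengths give zero automatically, because every closed path on the square lattice has as many up steps as down steps and as many left steps as right steps.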

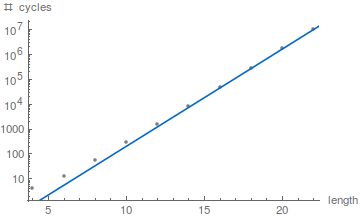

The number of possible paths of length $L$ is an integer sequence available at the OEIS, and we can fit the available values to an exponential curve to estimate $\mu$. The figure below shows this fit for the few values the list provides, and we can already see a curve very close to an exponential (a straight line in a log-linear plot). The value found for $\mu$ is 2.45.
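A sketch of such a fit, assuming we use our own brute-force counts instead of the OEIS values: a least-squares line through $\ln c_L = a + L\ln\mu$, whose slope gives $\ln\mu$. The helper names are hypothetical. Because the true counts also carry a slowly decaying power-law factor that this model ignores, a plain exponential fit over small $L$ tends to come out below the true $\mu$, and more so the smaller the lengths used.

```python
import math


def count_cycles(L):
    """Closed self-avoiding walks of L steps from the origin (brute-force DFS)."""
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    visited = {(0, 0)}

    def extend(x, y, remaining):
        total = 0
        for dx, dy in steps:
            p = (x + dx, y + dy)
            if p == (0, 0):
                total += 1 if remaining == 1 else 0  # close only on the last step
            elif p not in visited and abs(p[0]) + abs(p[1]) <= remaining - 1:
                visited.add(p)
                total += extend(p[0], p[1], remaining - 1)
                visited.remove(p)
        return total

    return extend(0, 0, L) if L >= 4 else 0


def fit_mu(lengths, counts):
    """Least-squares fit of ln c_L = a + L ln(mu); returns mu."""
    ys = [math.log(c) for c in counts]
    n = len(lengths)
    x_mean, y_mean = sum(lengths) / n, sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(lengths, ys))
    den = sum((x - x_mean) ** 2 for x in lengths)
    return math.exp(num / den)


lengths = list(range(4, 13, 2))
counts = [count_cycles(L) for L in lengths]
print(counts)
print(f"fitted mu = {fit_mu(lengths, counts):.3f}")
```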

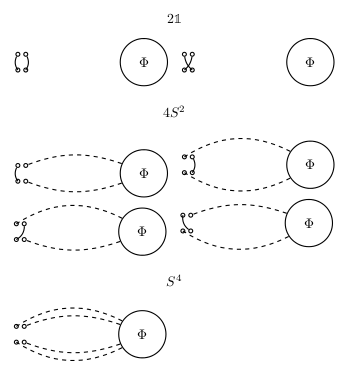

Recent work on the subject concentrates on enumerating polygons of a given perimeter, counted up to translation. This is slightly different from the problem we are studying, which is the number of cycles of a given length rather than polygons. The difference is that polygons are neither oriented nor have a distinguished vertex that serves as the starting point. In the figure below, for instance, we see all polygons of perimeter 8.

These two problems are closely related, though. If $c_L$ is the number of cycles of length $L$ and $p_L$ is the number of polygons of the same length, they are connected by

$$c_L = 2L\,p_L.$$

This formula is easy to prove: in a polygon of length $L$, any of its $L$ sites can be placed on the fixed starting site of a cycle, hence the factor $L$; and for every starting site there are two directions to follow, clockwise or counterclockwise, which gives the factor 2. The number we are seeking is the number of cycles $c_L$.
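The relation $c_L = 2L\,p_L$ can be checked numerically for small $L$. The sketch below (helper names hypothetical) generates every cycle from the origin, reduces each one to the polygon it traces, represented as its set of unoriented edges translated so that its bounding box starts at $(0, 0)$, and counts the distinct polygons.

```python
def cycles(L):
    """Yield each closed self-avoiding walk of L steps from the origin as its
    list of visited sites (origin first, not repeated at the end)."""
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    path, visited = [(0, 0)], {(0, 0)}

    def extend(remaining):
        x, y = path[-1]
        for dx, dy in steps:
            nxt = (x + dx, y + dy)
            if nxt == (0, 0):
                if remaining == 1 and L >= 4:  # close only on the last step
                    yield list(path)
                continue
            if nxt in visited or abs(nxt[0]) + abs(nxt[1]) > remaining - 1:
                continue
            path.append(nxt)
            visited.add(nxt)
            yield from extend(remaining - 1)
            path.pop()
            visited.remove(nxt)

    yield from extend(L)


def polygon_key(path):
    """Translation-invariant, orientation-free description of the traced polygon."""
    min_x = min(x for x, _ in path)
    min_y = min(y for _, y in path)
    shifted = [(x - min_x, y - min_y) for x, y in path]
    # Pair each site with the next one, wrapping around to close the loop.
    return frozenset(frozenset(e) for e in zip(shifted, shifted[1:] + shifted[:1]))


for L in range(4, 11, 2):
    all_cycles = list(cycles(L))
    c_L = len(all_cycles)
    p_L = len({polygon_key(cyc) for cyc in all_cycles})
    print(L, c_L, p_L, 2 * L * p_L)  # the last column reproduces c_L
```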

Nowadays we know that the number of cycles grows approximately as

$$c_L \approx A\,\mu^L L^{-3/2},$$

where the exponent $-3/2$ is believed to be exact and the best estimate of the connective constant is $\mu \approx 2.638158$. So $\mu$ is indeed less than 3, as observed, and this value is much more precise than our naive estimate of 2.45.
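Taking this asymptotic form at face value also explains how to do better than a plain exponential fit. Assuming $c_L \approx A\,\mu^L L^{-3/2}$, the ratio of consecutive even terms is $c_L/c_{L-2} \approx \mu^2\,(L/(L-2))^{-3/2}$, so the unknown amplitude $A$ cancels and $\mu$ can be estimated from just two counts. The sketch below is a simple version of this ratio method on brute-force counts; the helper names are hypothetical, and at such small $L$ the corrections to the asymptotic form are not negligible, so this gives only a rough check, not a precision estimate.

```python
import math


def count_cycles(L):
    """Closed self-avoiding walks of L steps from the origin (brute-force DFS)."""
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    visited = {(0, 0)}

    def extend(x, y, remaining):
        total = 0
        for dx, dy in steps:
            p = (x + dx, y + dy)
            if p == (0, 0):
                total += 1 if remaining == 1 else 0  # close only on the last step
            elif p not in visited and abs(p[0]) + abs(p[1]) <= remaining - 1:
                visited.add(p)
                total += extend(p[0], p[1], remaining - 1)
                visited.remove(p)
        return total

    return extend(0, 0, L) if L >= 4 else 0


def ratio_estimate(L, c):
    """Estimate mu from c[L] and c[L - 2], assuming c_L ~ A mu^L L^(-3/2)."""
    return math.sqrt(c[L] / c[L - 2] * (L / (L - 2)) ** 1.5)


c = {L: count_cycles(L) for L in range(4, 13, 2)}
for L in range(6, 13, 2):
    print(f"L = {L:2d}: mu estimate = {ratio_estimate(L, c):.4f}")
```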

Some references

Herbert B. Callen, “Thermodynamics and an Introduction to Thermostatistics” (1998)

If you want more details on why we minimize the Helmholtz free energy.

John Cardy, “Scaling and Renormalization in Statistical Physics”, Cambridge Lecture Notes in Physics, Vol. 5, Cambridge University Press (1996)

If you want to go beyond the Peierls argument.

Iwan Jensen and Anthony J. Guttmann, “Self-avoiding polygons on the square lattice”, Journal of Physics A: Mathematical and General 32.26 (1999)

To know more about the numerical studies of the value of $\mu$.

The OEIS

It has sequences for both the number of cycles and the number of polygons on the square lattice.

Source code

The source code is provided on GitHub under the MIT license.